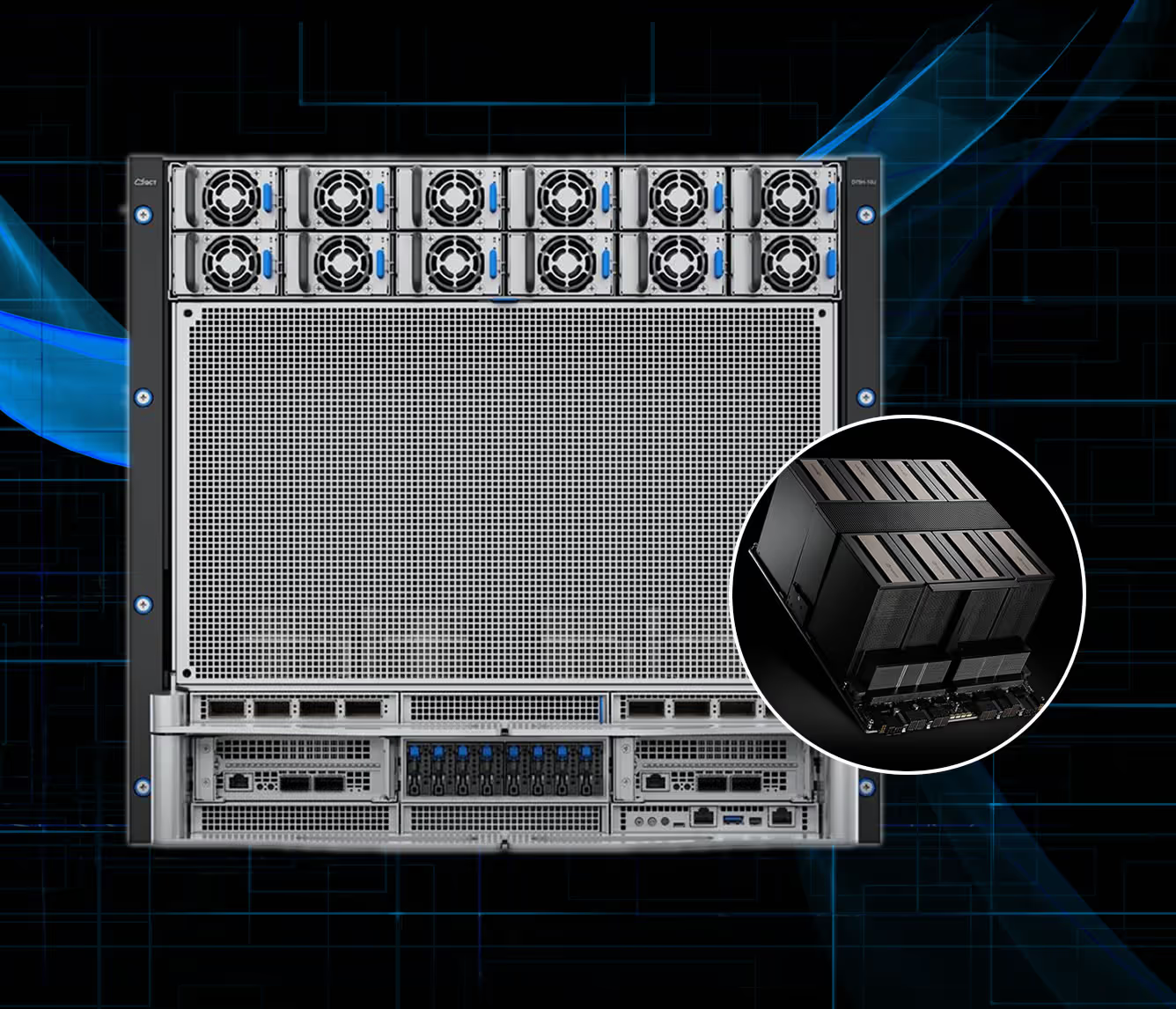

Pre-Order QuantaGrid D75H-10U with B300

A new scale of AI performance. The QuantaGrid D75H-10U with NVIDIA HGX B300 is a 10U air-cooled platform engineered for Hyperscale LLM Training, AI Reasoning, and Real-Time Agentic AI.

.png)

The Architectural Breakthrough: NVIDIA HGX B300

The same reference architecture used by hyperscalers, optimized for your enterprise AI workloads and data center requirements. However, if this exact model isn't the perfect fit, we also specialize in custom configurations and have access to alternative B300-based systems coming soon from partners like DTI, Aivres, Gigabyte, Supermicro, Lenovo, Dell, HPE and ASUS.

Core Platform Architecture

Blackwell & Second-Generation Transformer Engines

Powers LLMs and MoE workloads with 11x faster inference and 4x faster training, slashing costs for trillion-parameter models.

Grace CPU with NVLink-C2C

Unifies CPU-GPU memory, eliminating bottlenecks for massive AI datasets.

Dedicated Decompression Engines

Accelerates data preprocessing—a common bottleneck in AI pipelines. This ensures the GPUs are fully saturated with data, not sitting idle, which is critical for maximizing Return On Investment (ROI) on your AI infrastructure.

10U Air-Cooled Design

Deploys revolutionary performance with existing data center infrastructure. This isn't a prototype requiring specialized liquid cooling; it's a production-ready system you can integrate today, simplifying deployment and reducing operational overhead.

Your Toughest AI Challenges, Solved

You can now transform your most demanding AI workloads into opportunities for innovation. The QuantaGrid D75H-10U delivers the performance and efficiency for hyperscale applications, accelerating breakthroughs while reducing costs.

Train Foundational Models

Accelerate the training of trillion-parameter models, cutting development cycles from weeks to days with 4x faster performance. Rapidly pre-train and fine-tune state-of-the-art LLMs for any domain.

Deploy at Scale

Serve millions of simultaneous users in real-time with 11x faster inference. Power advanced chatbots, coding assistants, and analytical tools while slashing latency and operational costs.

Precision Engineered Peak Performance

QuantaGrid D75H-10U’s components maximize NVIDIA HGX B300’s potential ensuring uptime and efficiency in your data center.

Compute & Graphics (HGX B300)

- Baseboard: Single eight-accelerator baseboard design

- GPU Modules: 8x B300 GPUs with Blackwell architecture

- GPU Memory: 288GB HBM3e per GPU (2.3TB total)

- Interconnect: NVLink-C2C coherent fabric

Processor & Compute Options

- Processor Architecture: Multiple options available

- Intel Xeon 6: High-core-count configurations available

- Core Count Options: Various configurations to match workload needs

- Cache & Memory: Optimized for AI workload characteristics

Memory & Storage Subsystem

- System Memory: From 2TB DDR5, expandable configurations

- Memory Speed: 6400MHz RDIMM performance

Networking & Cluster Connectivity

- 4x FHHL PCIe Gen5 x16 SlotNetwork Interface: 8x OSFP ports per systems

- Fabric Options: 800G Ethernet or InfiniBand configurations

Expert Configuration and Deployment Support

We ensure your AI infrastructure is perfectly matched to your workloads, with comprehensive support from planning through production deployment.

Secure Your QuantaGrid D75H-10U

We are currently accepting deposits to reserve your B300 server for the upcoming Late October delivery window. Fill out the form to initiate the deposit process and finalize your order details with a Dataknox specialist.