Maximize your GPU clusters’ potential with our high-speed networking solutions—engineered to eliminate bottlenecks

Network Solutions for GPU Workloads

Accelerate data pipelines between GPUs, storage, and edge devices with ultra-low-latency architectures

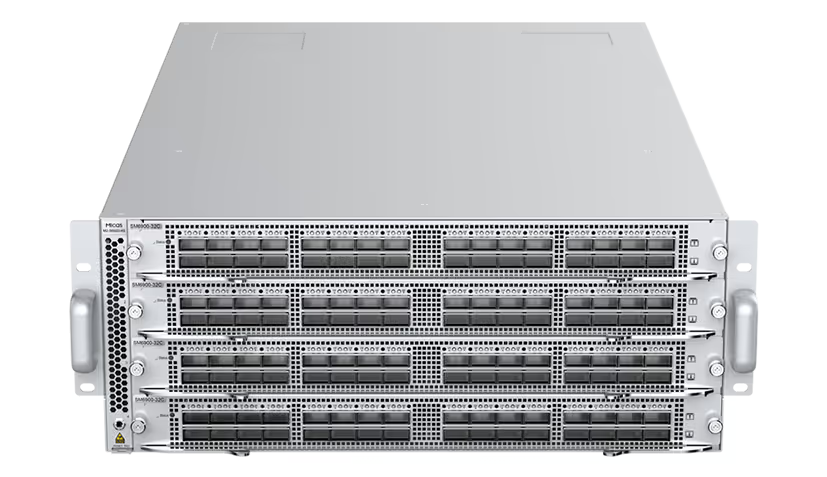

Micas Networks 400G 128P Tomahawk 5 Ethernet Switch

Its high-density 400GbE ports and RoCEv2 support enable seamless connectivity for large GPU clusters, making it a robust solution for AI-driven data centers.

Micas Networks M2-W6920-4S

The M2-W6920-4S is a high-density, 1U switch optimized for AI/ML applications, offering 12.8 Tbps bandwidth and 400GbE/100GbE connectivity.

Micas Networks 51.2T Co-Packaged Optics

By integrating optics with the switch chip, it overcomes copper interconnect limitations, delivering high bandwidth and low latency for AI/ML workloads.

Micas Networks M2-W6940-64OC

This high-density 800G Ethernet switch is designed to meet the demands of AI/ML workloads, offering ultra-high performance, low latency, and scalability for large-scale data centers.

Powering Next-Gen GPU Deployments

Enable seamless scaling for AI training, real-time inference, and distributed computing.

AI/ML Cluster Optimization

Edge AI Orchestration

Expert recommendations for Your Workflow

Get a Quote