High Performance Deep Learning and AI Server

Dataknox offers cutting-edge AI hardware solutions, revolutionizing AI applications and research with its High-Performance Deep Learning and AI Servers. These servers, equipped with advanced technology, are tailored for optimal performance in complex AI environments.

In the rapidly evolving landscape of AI and deep learning, where the demand for computational power is at an all-time high, High-Performance Deep Learning and AI Servers are crucial. These servers, designed to manage advanced algorithms and large datasets, are pivotal in accelerating deep learning tasks, ensuring faster data processing and shorter training times.

Multi-GPU Performance

Pre-Installed Frameworks & Toolchain

Standard 3-Year Warranty

Welcome to the Future of Computing with AI Servers

Dataknox distinguishes itself by incorporating top-tier GPUs and accelerators from leaders like Nvidia, Intel, and AMD into its AI servers. Featuring cutting-edge models such as Nvidia's H100 and Intel's Gaudi 2, our servers are designed for unparalleled performance in demanding AI and deep learning applications. This strategic selection ensures that Dataknox servers are not just equipped for current computational challenges but are also primed for future advancements. Our commitment to integrating the best GPUs available underlines our dedication to delivering superior, future-ready AI hardware solutions.

Lenovo ThinkStation P360 Ultra

Compact workstation for edge AI and data science, supporting Intel Xeon W CPUs, 128GB DDR5, and NVIDIA T1000 GPUs. No standalone GPUs are included; it supports GPUs via PCIe.

Single Intel Xeon W-1200 Series processor (up to 10 cores)

Up to 128GB DDR5-4800 (4x DIMM slots)

Up to 2x 2.5” SATA/NVMe SSDs, plus 2x M.2 SSDs

2x PCIe 4.0 x16 slots (for NVIDIA T1000 or RTX A2000 GPUs)

Lenovo ThinkSystem SR630 V3

The Lenovo ThinkSystem SR630 V3 (part number 7D73CTO1WW) is a 1U server for AI inference and edge AI, supporting dual Intel Xeon CPUs, 4TB DDR5, and 3x NVIDIA or Intel accelerators.

Dual 5th Gen Intel Xeon Scalable processors (up to 64 cores each)

Up to 4TB DDR5-5600 (16x DIMM slots)

Up to 10x 2.5” NVMe/SATA/SAS hot-swap bays, plus 2x M.2 SSDs

Up to 3x PCIe 5.0 x16 slots (for NVIDIA A100, L40S, or Intel Gaudi 3 accelerators)

Lenovo ThinkSystem SR650 V3

The Lenovo ThinkSystem SR650 V3 is a 2U server for AI training and HPC, supporting dual Intel Xeon CPUs, 8TB DDR5, and 4x NVIDIA H100 GPUs. Highlighted at NVIDIA GTC 2025, it’s orderable now for scalable AI infrastructure.

Dual 5th Gen Intel Xeon Scalable processors (up to 64 cores each)

Up to 8TB DDR5-5600 (32x DIMM slots)

Up to 20x 3.5” or 40x 2.5” NVMe/SATA/SAS hot-swap bays, plus 2x M.2 SSDs

Up to 8x PCIe 5.0 x16 slots (for NVIDIA H100, A100, or L40S GPUs)

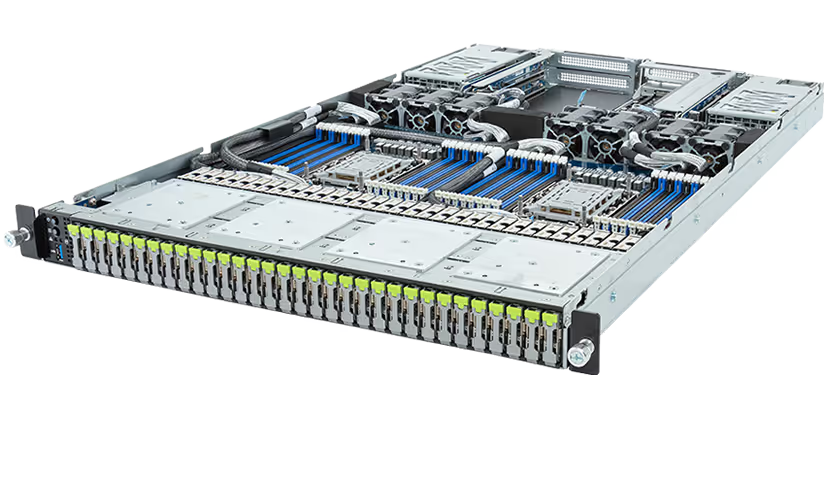

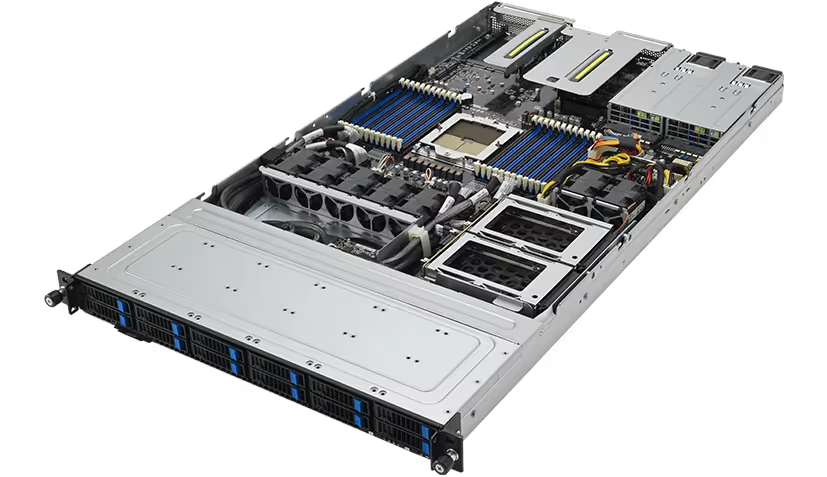

GIGABYTE R284-A91

The GIGABYTE R284-A91 (part number R284-A91-AAL3) is a 2U server for memory-intensive AI workloads, supporting dual Intel Xeon CPUs, 4TB DDR5, CXL memory, and 4x NVIDIA or Intel accelerators.

Dual Intel Xeon 6900 Series processors (up to 144 cores each)

Up to 4TB DDR5-5600 (24x DIMM slots), plus 16x Micron CXL memory modules

Up to 12x E3.S NVMe hot-swap bays, plus 2x M.2 SSDs

Up to 4x PCIe 5.0 x16 slots (for NVIDIA A100 or Intel Gaudi 3 accelerators), plus CXL expansion

GIGABYTE G493-SB0

The GIGABYTE G493-SB0 is a GPU server for AI workloads, supporting dual 5th Gen Intel Xeon Scalable CPUs, up to 4TB DDR5, and up to 8x PCIe 5.0 x16 GPUs.

Dual 5th Gen Intel Xeon Scalable processors (up to 64 cores each)

Up to 4TB DDR5-5600 (16x DIMM slots)

Up to 12x 2.5” NVMe/SATA/SAS hot-swap bays, plus 2x M.2 SSDs

Up to 8x PCIe 5.0 x16 slots (for NVIDIA L40S, A100, or AMD Instinct MI300X GPUs)

GIGABYTE G894-SD1

The GIGABYTE G894-SD1 (part number G894-SD1-AAX5) is a 4U server for AI training and inference, supporting dual Intel Xeon CPUs, 8TB DDR5, and 12x NVIDIA B200 or Intel Gaudi 3 accelerators.

Dual Intel Xeon 6700 or 6500 Series processors (up to 128 cores each)

Up to 8TB DDR5-5600 (32x DIMM slots)

Up to 24x 2.5” NVMe/SATA/SAS hot-swap bays, plus 2x M.2 SSDs

Up to 12x PCIe 5.0 x16 slots (for NVIDIA HGX B200, H100, or Intel Gaudi 3 accelerators)

GIGABYTE S183-SH0

The GIGABYTE S183-SH0 (part number S183-SH0-AF1) is a 1U edge server for AI inference, supporting a single Intel Xeon CPU, 2TB DDR5, and NVIDIA A2/T4 GPUs.

Single 5th Gen Intel Xeon Scalable processor (up to 64 cores)

Up to 2TB DDR5-5200 (16x DIMM slots)

Up to 4x 2.5” NVMe/SATA hot-swap bays, plus 2x M.2 SSDs

2x PCIe 5.0 x16 slots (for NVIDIA A2 or T4 GPUs)

GIGABYTE G293-Z40

The GIGABYTE G293-Z40 (part number G293-Z40-AF2) is a 2U server for AI inference and edge AI, supporting a single AMD EPYC CPU, 3TB DDR5, and 4x NVIDIA GPUs. Orderable now, it’s ideal for compact AI setups.

Single AMD EPYC 9004 Series processor (up to 96 cores)

Up to 3TB DDR5-4800 (12x DIMM slots)

Up to 12x 2.5” NVMe/SATA hot-swap bays, plus 2x M.2 SSDs

Up to 4x PCIe 5.0 x16 slots (for NVIDIA A100 or L40S GPUs)

GIGABYTE G593-ZD2

The GIGABYTE G593-ZD2 (part number G593-ZD2-AF2) is a 5U server for AI training and inference, supporting dual AMD EPYC CPUs, 6TB DDR5, and 10x NVIDIA or AMD GPUs. Orderable now, it’s ideal for AI factories with liquid-cooling.

Dual AMD EPYC 9004 Series processors (up to 96 cores each)

Up to 6TB DDR5-4800 (24x DIMM slots)

Up to 12x 3.5” or 24x 2.5” NVMe/SATA/SAS hot-swap bays

Up to 10x PCIe 5.0 x16 slots (for NVIDIA H100, A100, or AMD Instinct MI350 GPUs)

GIGABYTE G383-R80

The GIGABYTE G383-R80 (part number G383-R80-AF2) is a 3U server for AI training and HPC, supporting dual Intel Xeon Scalable CPUs, 8TB DDR5, and 8x NVIDIA H100 GPUs.

Dual 5th Gen Intel Xeon Scalable processors (up to 64 cores each)

Up to 8TB DDR5-5600 (32x DIMM slots)

Up to 24x 2.5” NVMe/SATA/SAS hot-swap bays, plus 2x M.2 SSDs

Liquid-cooled NVIDIA HGX™ H100 with 8x SXM5 GPUs; 8x PCIe x16 (Gen5 x16) low-profile slots

Lenovo ThinkSystem SR675 V3

2U AI-optimized server supporting dual AMD EPYC 9004 CPUs, up to 6TB DDR5, and 8x NVIDIA H100 GPUs.

Dual AMD EPYC 9004 Series processors (up to 96 cores each)

Up to 6TB DDR5-4800 (24x DIMM slots)

Up to 24x 2.5” NVMe/SATA/SAS hot-swap bays or 12x 3.5” bays

Up to 8x PCIe 4.0/5.0 x16 slots (for NVIDIA H100, A100, or L40S GPUs)

ASUS ESC8000-E12P Server

The ASUS ESC8000-E12P Server (part number ESC8000-E12P) is a 4U server for AI and HPC, supporting dual Intel Xeon 6 processors, up to 8TB DDR5, and 24x storage bays.

Dual Intel Xeon 6 Scalable processors (up to 144 cores each)

Up to 8TB DDR5-5600 (32x DIMM slots)

Up to 24x 2.5”/3.5” NVMe/SATA/SAS hot-swap bays

8x PCIe 5.0 x16 slots (for NVIDIA GPUs or Intel Gaudi 3 AI accelerators), 2x PCIe 5.0 x8 slots

ASUS RS700-E12 Server

The ASUS RS700-E12 Server (part number RS700-E12) is a server for AI and HPC, supporting up to 4TB DDR5, 12x NVMe/SATA storage bays, and 2x PCIe 5.0 x16 slots for accelerators.

Dual Intel Xeon Scalable processors

Up to 4TB DDR5-5600 (16x DIMM slots)

Up to 12x 2.5” NVMe/SATA hot-swap bays, optional 2x M.2 slots

2x PCIe 5.0 x16 slots (for NVIDIA GPUs or Intel Gaudi 3 AI accelerators), 1x PCIe 5.0 x8 slot

ASUS ESC8000A-E11 Server

The ASUS ESC8000A-E11 Server (part number ESC8000A-E11) is a 4U server for AI and HPC, supporting 8x NVIDIA A100 GPUs, up to 8TB DDR4, and 24x storage bays.

Dual AMD EPYC 7763 CPUs (64 cores each, Milan)

Up to 8TB DDR4-3200 (32x DIMM slots)

Up to 24x 2.5”/3.5” SATA/SAS/NVMe hot-swap bays

8x PCIe 4.0 x16 slots (for NVIDIA A100 PCIe 80GB GPUs), 2x PCIe 4.0 x8 slots

ASUS RS720A-E12-RS24 Server

The ASUS RS720A-E12-RS24 Server (part number RS720A-E12-RS24) is a 2U server for AI and HPC, supporting 8x NVIDIA H100 GPUs, 6TB DDR5, and 24x NVMe/SATA bays.

Dual AMD EPYC 9004 Series CPUs (up to 96 cores each)

Up to 6TB DDR5-4800 (24x DIMM slots)

Up to 24x 2.5”/3.5” NVMe/SATA hot-swap bays

8x PCIe 5.0 x16 slots (for NVIDIA H100 PCIe GPUs), 2x PCIe 5.0 x8 slots

ASUS RS501A-E12 Server

1U server for AI/HPC storage, certified for Weka’s software-defined storage and NVIDIA AI Data Platform.

Single AMD EPYC 9004 Series or Intel Xeon Scalable processor (up to 96 cores for EPYC)

Up to 3TB DDR5-4800 (12x DIMM slots)

Up to 4x 2.5” NVMe/SATA hot-swap bays or 2x M.2 slots

2x PCIe 5.0 x16 slots (for NVIDIA GPUs), 1x PCIe 5.0 x8 slot

ASUS ESC N4A-E11 Server

The ASUS ESC N4A-E11 Server (part number ESC-N4A-E11) is a 2U server for AI training and HPC, supporting 4x NVIDIA A100/H100 GPUs, up to 2TB DDR4, and 12x hot-swap storage bays.

Single AMD EPYC 7773X CPU (64 cores, Milan-X with 3D V-Cache)

Up to 2TB DDR4-3200 (8x DIMM slots)

Up to 12x 3.5”/2.5” SATA/SAS/NVMe hot-swap bays (configuration-dependent)

4x PCIe 4.0 x16 slots (for NVIDIA A100/H100 GPUs), 1x PCIe 4.0 x8 slot

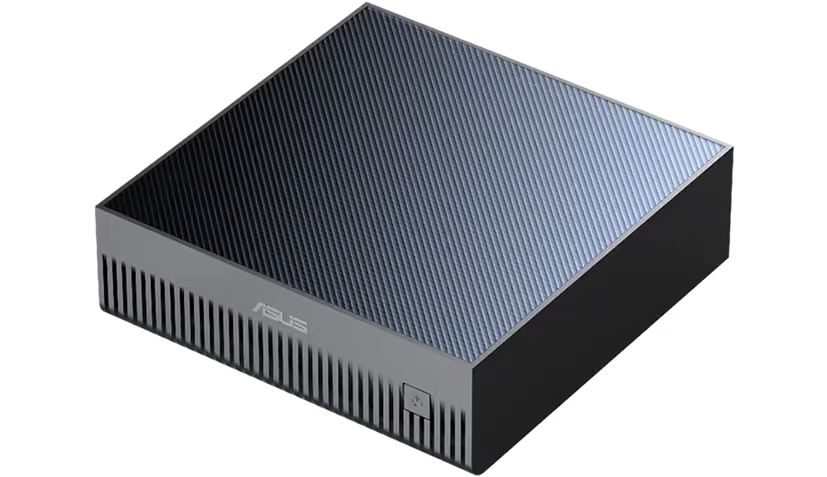

ASUS Ascent GX10

The ASUS Ascent GX10 (part number ASCENT-GX10) is a compact mini-PC powered by NVIDIA’s GB10 Grace Blackwell Superchip, delivering up to 1,000 TFLOPS (1 petaFLOP) of FP4 AI performance for edge AI, LLM training, and inferencing.

NVIDIA GB10 Grace Blackwell Superchip (20-core Arm CPU with Cortex-X925 and Cortex-A725 cores)

128GB unified LPDDR5x memory

Up to 4TB M.2 NVMe SSD storage

No PCIe expansion slots (integrated design)

Lenovo ThinkSystem SR685a V3

The SR685a V3 pairs 8x high-performance GPUs with dual AMD EPYC CPUs for generative AI workloads.

Supports up to 2 AMD EPYC 9004 Series processors

Up to 96 GB per DIMM

8 x 2.5-inch hot-swap drive bays supporting PCIe 5.0 NVMe drives

8 x PCIe 5.0 x16 FHHL

Lenovo ThinkSystem SR780a V3

The SR780a V3 combines 8x of the latest NVIDIA GPUs with 5th Gen Xeon CPUs in a liquid-cooled 5U chassis for generative AI workloads.

Supports up to 2 5th Gen Intel Xeon Scalable processors

Up to 96 GB per DIMM

8 x 2.5-inch hot-swap drive bays supporting PCIe 5.0 NVMe drives

8 x PCIe 5.0 x16 FHHL

Dell PowerEdge XE8545

Memory Module Slots: 32 slots (288-pin), 16 slots per processor.

Supports up to 2 AMD EPYC 7003 Series processors (3rd Generation, Milan), with up to 64 cores per CPU

8/16/32 DIMMs (2TB max with XE9640 GPU configuration)

Supports up to 10 x 2.5-inch hot-swappable drives

Supports up to 4 PCIe Gen 4 expansion slots

Dell PowerEdge XE9640

High-density 2U server supporting 4x GPUs for AI acceleration.

Up to 2 Intel Xeon Scalable processors (4th or 5th Generation)

8/16/32 DIMMs (2TB max with XE9640 GPU configuration)

Up to 4 x 2.5-inch NVMe SSD drives

Up to 4 PCIe Gen 5 slots (x16), designed for high-performance expansion

GIGABYTE G292-Z40

2U server with 4x H100 GPUs and 4TB memory for AI training.

Supports up to 2 AMD EPYC 7002/7003 Series processors

DDR4 RDIMM (Registered DIMM) or LRDIMM (Load-Reduced DIMM), 8-channel memory architecture per processor.

8 x 2.5-inch hot-swappable bays with a hybrid backplane

Supports up to 10 PCIe Gen 4 slots

HPE ProLiant DL380 Gen11

The HPE ProLiant DL380 Gen11 features dual Intel Xeon or AMD EPYC processors, supporting 4x GPUs for AI and virtualization.

DIMM Speed: Up to 5600 MT/s (DDR5)

24x NVMe U.2/EDSFF (368TB max) + dual M.2 boot drives.

PCIe 5.0 x16 slots + OCP 3.0 (200Gbps) for GPUs/NVMe/network expansion.

HPE Apollo 6500 Gen11

The HPE Apollo 6500 Gen11 Server features dual 4th/5th Gen Intel Xeon or AMD EPYC processors, supporting 8x high-performance GPUs for AI and HPC workloads.

Intel 4th/5th Gen Xeon Scalable (up to 64 cores) or AMD EPYC 9004 (up to 96 cores).

Memory Type: RDIMM/3DS RDIMM

32x NVMe U.2/EDSFF (max 491TB) + dual M.2 boot drives.

PCIe 5.0 x16 slots + OCP 3.0 (200Gbps) for GPUs/NVMe expansion.

PowerEdge XE8640 Rack Server

The PowerEdge XE8640 Rack Server features four NVIDIA® H100 GPUs, 4 TB max RDIMM memory, up to 122.88 TB storage with NVMe SSDs, and comprehensive security measures.

Up to 56 cores per processor

Up to 4800 MT/s, RDIMM 4 TB max

Up to 8 x 2.5-inch NVMe SSD drives max 122.88 TB

2 CPU configuration: Up to 4 PCIe slots (4 x16 Gen5)

PowerEdge XE9640 Rack Server

The Dell PowerEdge XE9640 Rack Server features dual 4th Generation Intel® Xeon® Scalable processors, offering up to 56 cores per processor.

Up to 56 cores per processor

RDIMM 1 TB max, Up to 4800 MT/s

Up to 4 x 2.5-inch NVMe SSD drives max 61.44 TB

2 CPU configuration: Up to 4 PCIe slots (4 x16 Gen5)

PowerEdge XE9680 Rack Server

The Dell PowerEdge XE9680 rack server is a high-performance, robust server designed to meet data-intensive workloads and complex computing tasks.

Two 4th Generation Intel® Xeon® Scalable processors with up to 56 cores per processor

32 DDR5 DIMM slots, up to 4800MT/s

Up to 8 x 2.5-inch NVMe SSD drives max 122.88 TB

Up to 10 PCIe Gen5 x16 slots (full-height, half-length)

5688M7 Extreme AI Server Delivering 16 PFLOPS Performance

5688M7 is an advanced AI system supporting NVIDIA HGX H100/H200 8-GPUs to deliver industry-leading 16 PFLOPS of AI performance.

2x 4th Gen Intel® Xeon® Scalable Processors, up to 350W

32x DDR5 DIMMs, up to 4800MT/s

24x 2.5” SSD, up to 16x NVMe U.2; 2x onboard NVMe/SATA M.2

SuperServer SYS-421GU-TNXR | Universal 4U Dual Processor

Ideal for High-Performance Computing, AI/Deep Learning, LLM NLP with 8TB DDR5 memory, 8 PCIe Gen 5.0 slots, flexible networking, 2 M.2 NVMe/SATA, and 6 hot-swap drive bays.

Dual 5th Gen Intel® Xeon® / 4th Gen Intel® Xeon® Scalable processors

Slot Count: 32 DIMM slots; Max Memory: Up to 8TB 5600MT/s ECC DDR5

Hot-swap: 6x 2.5" hot-swap NVMe/SATA drive bays (6x 2.5" NVMe hybrid)

1x PCIe 5.0 x16 LP slot; 7x PCIe 5.0 x16 slots

A+ Server AS-4125GS-TNRT

AI/Deep Learning optimized, supports 8 GPUs, dual AMD EPYC™ 9004 up to 360W, 6TB DDR5 memory, 4 hot-swap NVMe bays, 1 M.2 slot, AIOM/OCP 3.0 support. Ideal for high-performance computing.

Dual AMD EPYC™ 9004 Series Processors up to 360W TDP

Slot Count: 24 DIMM slots; Max Memory: Up to 6TB 4800MT/s ECC DDR5 RDIMM/LRDIMM

24x 2.5" hot-swap NVMe/SATA/SAS drive bays (4x 2.5" NVMe dedicated)

9 PCIe 5.0 x16 FHFL slot(s)

SuperServer SYS-421GE-TNRT 4U Dual Processor

Powered by 5th/4th Gen Intel® Xeon®, it offers 8TB DDR5 memory, 13 PCIe Gen 5.0 slots, AIOM/OCP 3.0 support, and 8 hot-swap SATA bays, ideal for high-end computing demands.

Up to 64C/128T; Up to 320MB Cache per CPU

Slot Count: 32 DIMM slots; Max Memory: Up to 8TB 4400MT/s ECC DDR5 RDIMM

24x 2.5" hot-swap NVMe/SATA/SAS drive bays (8x 2.5" NVMe hybrid; 8x 2.5" NVMe dedicated)

12 PCIe 5.0 x16 FHFL slot(s)

SuperServer SYS-521GE-TNRT 5U Dual Processor

Meet the GPU SuperServer SYS-521GE-TNRT: A high-performance server with 5th/4th Gen Intel® Xeon® support, 8TB DDR5 memory, 13 PCIe Gen 5.0 slots, AIOM/OCP 3.0, and 8 hot-swap SATA bays. Ideal for data-intensive tasks.

Up to 64C/128T; Up to 320MB Cache per CPU

Max Memory (2DPC): Up to 8TB 4400MT/s ECC DDR5 RDIMM

8 x 2.5" Gen5 NVMe/SATA/SAS hot-swappable bays

Up to 10 double-width or 10 single-width GPU(s)

Gigabyte G593-SD0 HPC/AI Server - 4th/5th Gen Intel® Xeon® Scalable-5U

Intel's 4th and 5th Gen Xeon Scalable processors advance AI and deep learning performance, with PCIe 5.0 and High Bandwidth Memory support for improved CPU performance. GIGABYTE supports them with PCIe Gen5, NVMe drives, and DDR5 memory.

5th Generation or 4th Generation Intel® Xeon® Scalable Processors

3DS RDIMM modules up to 256GB supported

8 x 2.5" Gen5 NVMe/SATA/SAS hot-swappable bays

Liquid-cooled NVIDIA HGX™ H100 with 8x SXM5 GPUs; 8x PCIe x16 (Gen5 x16) low-profile slots

Gigabyte G593-ZX2 HPC/AI Server - AMD EPYC™ Instinct™ MI300X 8-GPU

HPC/AI Server with dual AMD EPYC™ 9004 (up to 128-core/processor), 8 AMD Instinct™ MI300X GPUs, and 24 DDR5 DIMM slots. Ideal for AI, training, and inference.

Dual AMD EPYC™ 9004 Series processors TDP up to 300W

Memory speed: Up to 4800 MT/s

8 x 2.5" Gen5 NVMe hot-swappable bays

Supports 8 x AMD Instinct™ MI300X OAM GPU modules; 8 x PCIe x16 (Gen5 x16) low-profile slots

Gigabyte G593-SD1 4th/5th Gen Intel® Xeon® Scalable

AI and deep learning performance will benefit from the built-in AI acceleration engines, while networking, storage, and analytics leverage other specialized accelerators in the 4th & 5th Gen Intel Xeon Scalable processors.

5th Generation or 4th Generation Intel® Xeon® Scalable Processors

32 x DIMM slots (DDR5 memory only)

8 x 2.5" Gen5 NVMe/SATA/SAS hot-swappable bays

4 x PCIe x16 (Gen5 x16) FHHL slots; 8 x PCIe x16 (Gen5 x16) low-profile slots

5180A7 High-Density 2-Socket Server with 4 Configurations

5180A7 is a high-density dual-socket server supporting AMD EPYC™ 9004 Processors that delivers high performance and reliability in different application scenarios.

1 or 2 AMD EPYC™ 9004 processors, TDP up to 400 W

24 DDR5 DIMM (12ch), support RDIMM/3DS DIMM, 4800@1DPC

Internal: 2x SATA M.2 SSD or 2x PCIe M.2 SSD

Up to 5x PCIe Gen5 slots (3x standard PCIe slots + 2x OCP 3.0 cards)

3280A7 Flexible 2U 1-Socket Server Supporting Up to 4 GPUs

3280A7 is a 2U server supporting one AMD EPYC™ 9004 processor. Its flexible configurations and ability to support up to 4 dual-width GPUs make it highly scalable for many applications, including AI and high-performance computing.

1 AMD EPYC™ 9004 processor, TDP up to 400W

24 DDR5 DIMM, supports RDIMM/3DS DIMM, 12 Channels

Internal: 2x SATA/PCIe M.2 SSD; 4x 3.5” SAS/SATA

Up to 8x standard PCIe slots (6 x16 FHHL + 2 x8 HHHL or 4 x16 FHHL + 4 x8 HHHL)

5698M7 6U 8-OAM AI Server for Next-Gen AI, LLM

5698M7 is an advanced AI system supporting 8 Habana® Gaudi®2 OAM accelerators and 2 Intel® Xeon® Scalable Processors. Leveraging the OAM form factor, it delivers scalable, high-speed performance for next-generation AI workloads and LLMs.

2x 4th Gen Intel® Xeon® Scalable Processors, up to 350W

32x DDR5 DIMMs, up to 4800MT/s

24x 2.5” SSD, up to 16x NVMe U.2

8x low-profile x16 + 2x FHHL x16

5688A7 Extreme AI Server Delivering 16 PFLOPS Performance

5688A7 is an advanced AI system supporting NVIDIA HGX H100/A100 8-GPUs to deliver industry-leading 16 PFLOPS of AI performance.

2x AMD EPYC™ 9004 Series processors

32x DDR5 DIMMs, up to 4800MT/s

24x 2.5” SSD, up to 16x NVMe U.2; 2x onboard NVMe/SATA M.2

5468M7 8 GPU AI Server Supporting Front/Back I/O & Liquid Cooling

5468M7 is a powerful 4U AI server with the latest PCIe Gen 5.0 architecture, supporting 8 FHFL PCIe GPU cards and 4th Gen Intel® Xeon® Scalable processors.

2x 4th Gen Intel® Xeon® Scalable Processors, up to 350W

32x DDR5 4800MT/s RDIMMs

24x 2.5” SSD, up to 16x NVMe U.2; 2x NVMe/SATA M.2

8x FHFL double-width PCIe 5.0 GPU cards; supports an additional 2x FL double-width PCIe GPUs and 1x FL single-width PCIe card

5468A7 8 GPU AI Server with PCIe 5.0

5468A7 is a powerful 4U AI server supporting AMD EPYC™ 9004 processors and the latest PCIe Gen 5.0 architecture. Its 10-card pass-through design significantly reduces CPU and GPU communication latency.

2x AMD EPYC™ 9004 processors, up to 400W

24x DDR5 4800MT/s RDIMMs

24x 2.5” SSD, up to 16x NVMe U.2; 2x NVMe/SATA M.2

11x PCIe 5.0 x16 slots

GPUs in AI Servers

Dataknox incorporates top-tier GPUs and accelerators from leaders like Nvidia, Intel, and AMD into its AI servers. Featuring cutting-edge models such as Nvidia's H100 and Intel's Gaudi 2, our servers are designed for unparalleled performance in demanding AI and deep learning applications.

The Nvidia H100 GPU: A Dataknox Premium Offering

Dataknox harnesses the power of the NVIDIA H100 Tensor Core GPUs, a critical component behind the performance of advanced AI models, including platforms like ChatGPT. With this GPU, we guarantee unparalleled performance, scalability, and security across diverse workloads.

Features up to 528 fourth-generation Tensor Cores (SXM variant)

80GB of memory (HBM3 for the SXM variant, HBM2e for PCIe)

Up to 700W (configurable)


The NVIDIA L40 GPU: Dataknox's Elite Visual Powerhouse

Revamp your infrastructure with Dataknox and NVIDIA's L40. Built on the Ada Lovelace architecture, it pairs advanced RT Cores with petaflop-scale Tensor Cores. Delivering up to twice the performance of its predecessor, the L40 is built for today's visual computing workloads.

Features 568 Tensor Cores and 142 RT Cores

48GB of memory capacity

The L40 GPU is passively cooled with a full-height, full-length (FHFL) dual-slot design capable of 300W maximum board power

Intel Gaudi 2: A Breakthrough in Deep Learning

Dataknox's AI servers, powered by Intel Gaudi 2, are not just hardware; they are a comprehensive solution for unlocking the potential of your data. Our servers seamlessly integrate with your existing IT infrastructure, providing a unified platform for AI development, deployment, and management.

24 Tensor Processor Cores (TPCs)

96GB of HBM2E with 2.45TB/s bandwidth

600 watts

AMD MI300X: The World's Fastest AI Accelerator

Dataknox presents the AMD MI300X, a cutting-edge addition to our AI server lineup. More than just hardware, it's a pivotal tool for AI innovation. Effortlessly integrating with your IT infrastructure, the MI300X by Dataknox offers a powerful, unified platform for AI development and deployment, unlocking new potentials in data intelligence.

304 CDNA 3 compute units in a GPU-only design (the 24 Zen 4 CPU cores belong to the related MI300A APU)

Up to 192GB of HBM3 memory